Young people today live in rich, fast-moving digital environments where their online identity is deeply tied to friendships, belonging, and self-expression. But the same spaces introduce threats they cannot always understand, such as privacy breaches, coercion, harassment, or account misuse.

“I know something could go wrong, but I don’t know what to do if it happens.”

Existing cybersecurity tools feel technical, authoritative, and overwhelming. And when the situation involves police or formal reporting, most teenagers feel intimidated and avoid seeking help, even when they urgently need it.

From interviews and workshops, three strong tensions emerged. These insights shaped the tone and structure of the service.

Security as empowerment, not restriction. To build trust, we designed around three core principles:

Cova.os is a companion system that helps teenagers understand digital risk, reflect on what they’re experiencing, and seek the right help without fear or overwhelm.

Onboarding introduces the system as a peer-aligned guide, not a monitoring tool.

The risk visualization dashboard translates complex threat signals into clear emotional language.

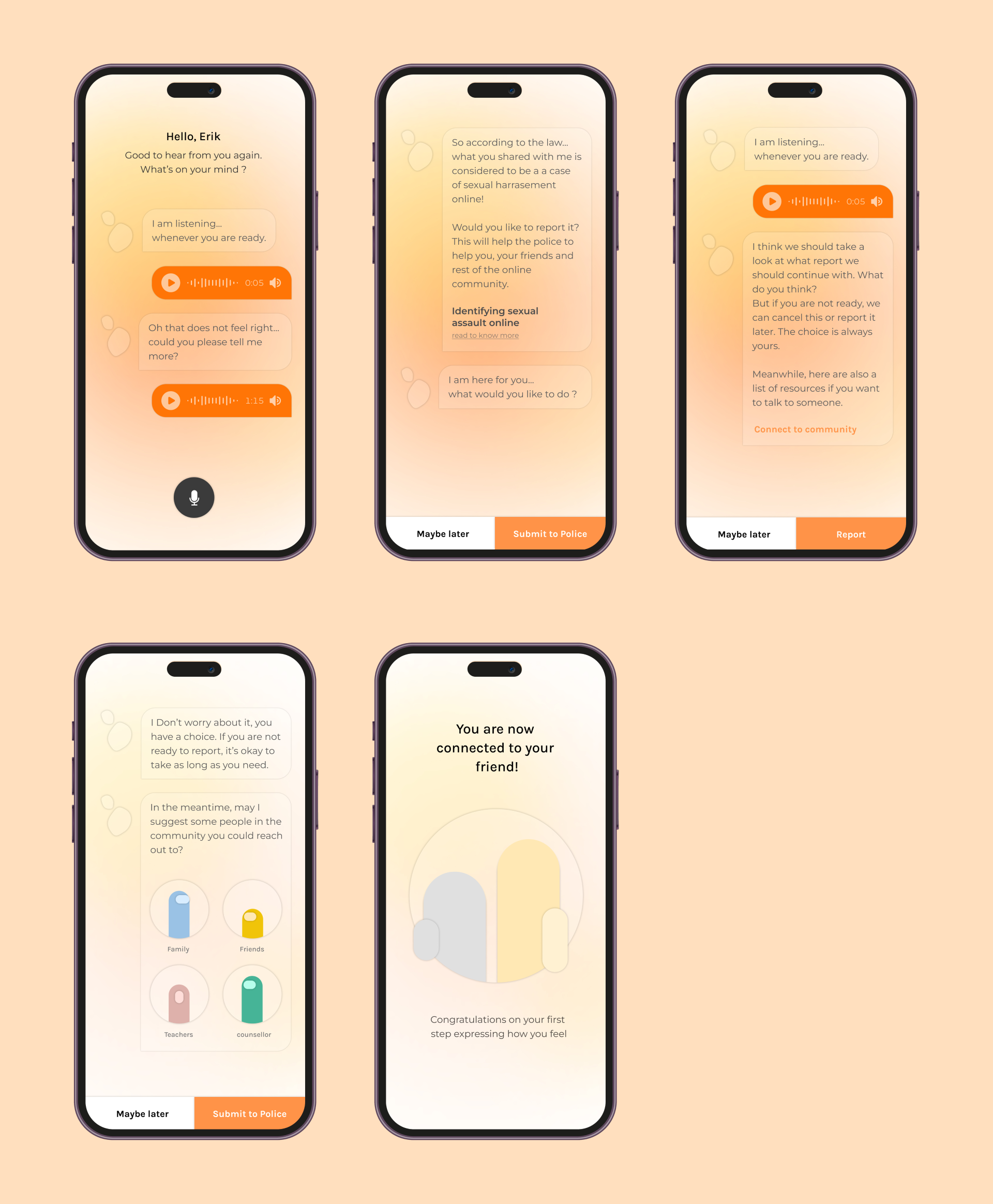

Conversation-based reporting allows users to express what happened in their own tone and pace.

When necessary, gentle, choice-driven escalation connects them to trusted adults or local authorities, always with emotional support first.

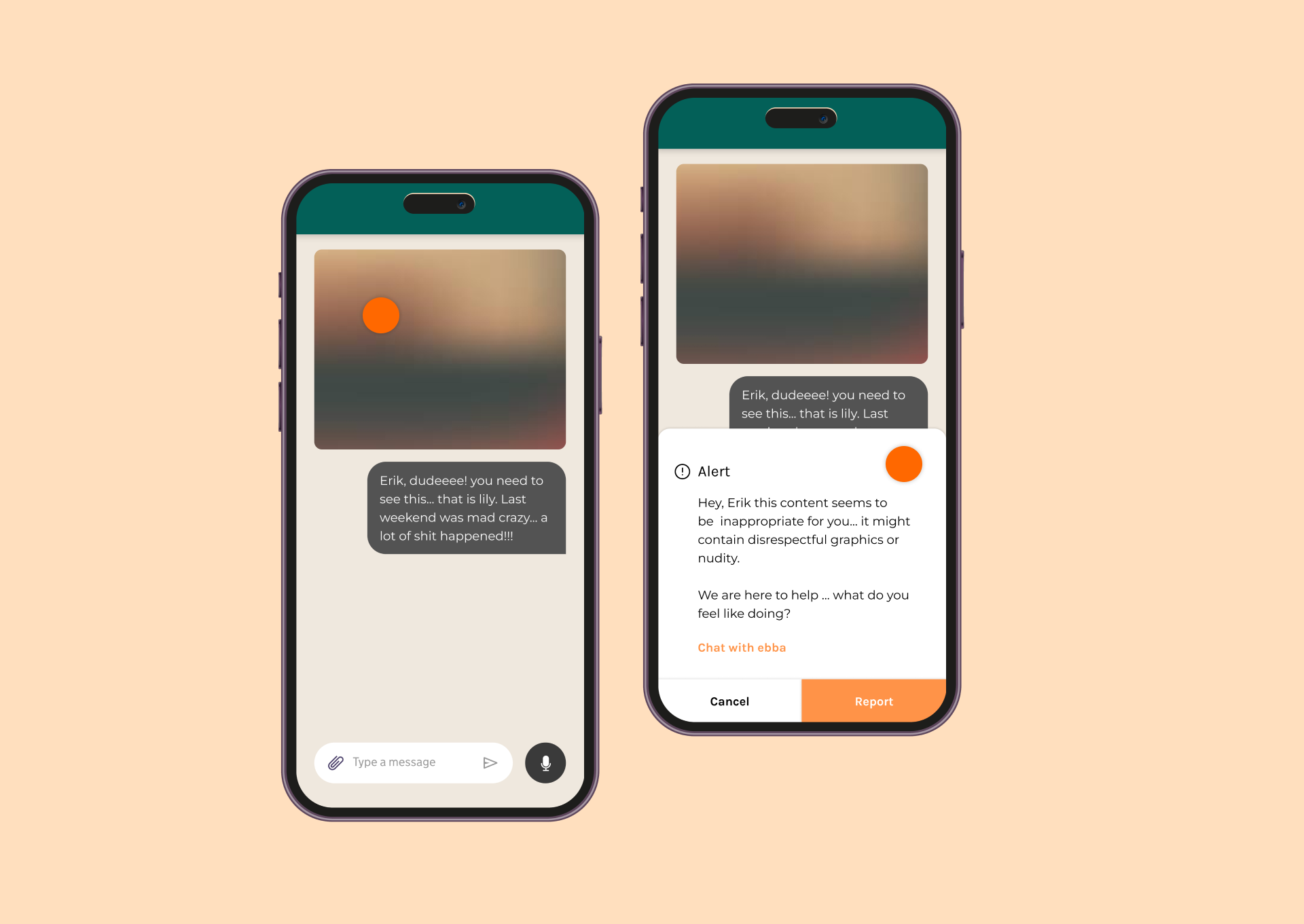

Cova detects an inappropriate image and automatically blurs it.

Teens often hesitate to ask for help online. Through empathetic language and tone, Ebba normalizes help-seeking, gently prompting users to lean on trusted people and grow stronger through connection.

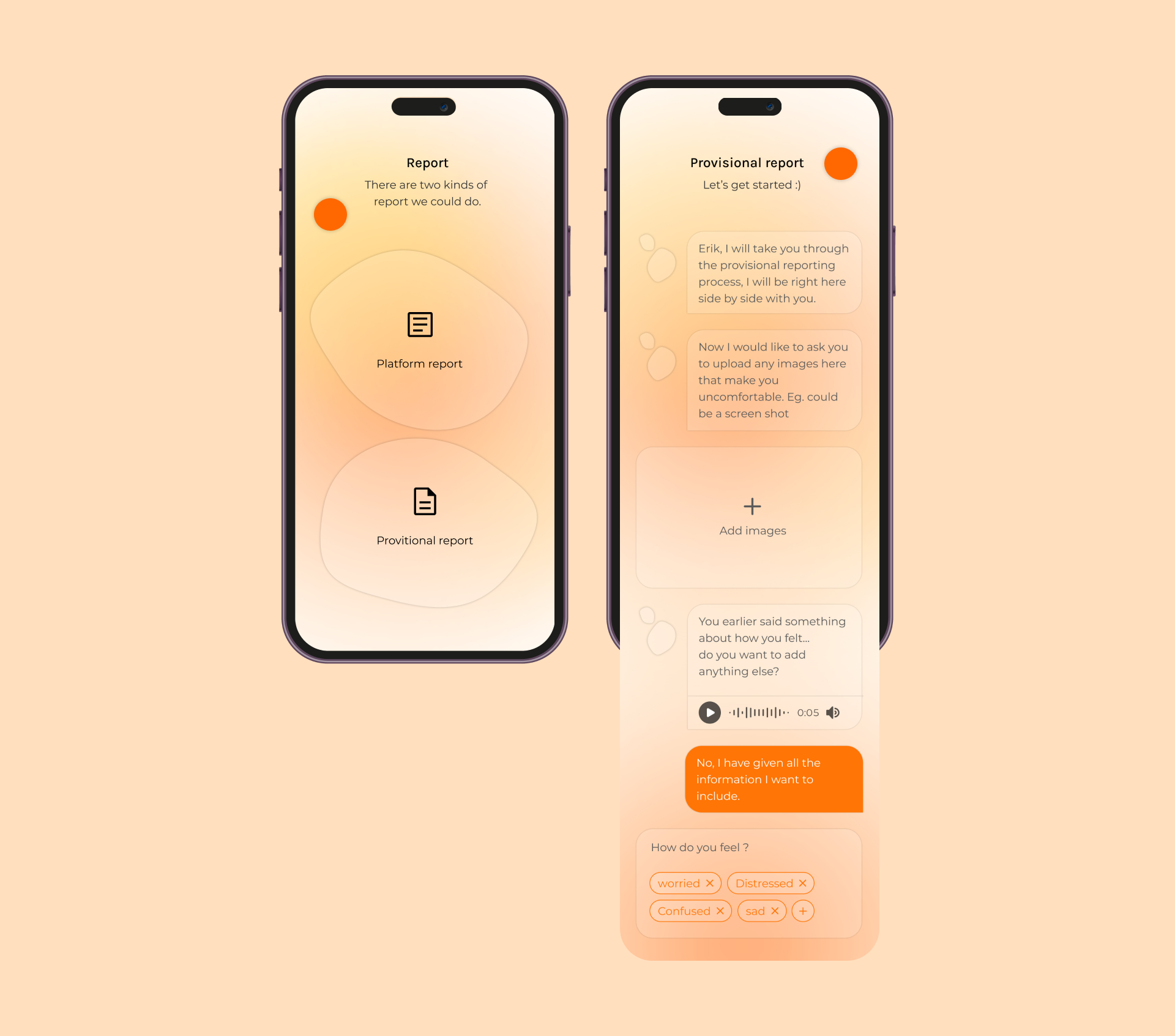

Platform Report lets them flag online threats directly where they happen, making reporting feel natural and immediate.

Provisional Report allows quick sharing with law enforcement, helping reveal online hotspots and patterns of risk.

Cova thanks the user for helping identify digital threat hotspots and strengthen online safety by reporting the incident.

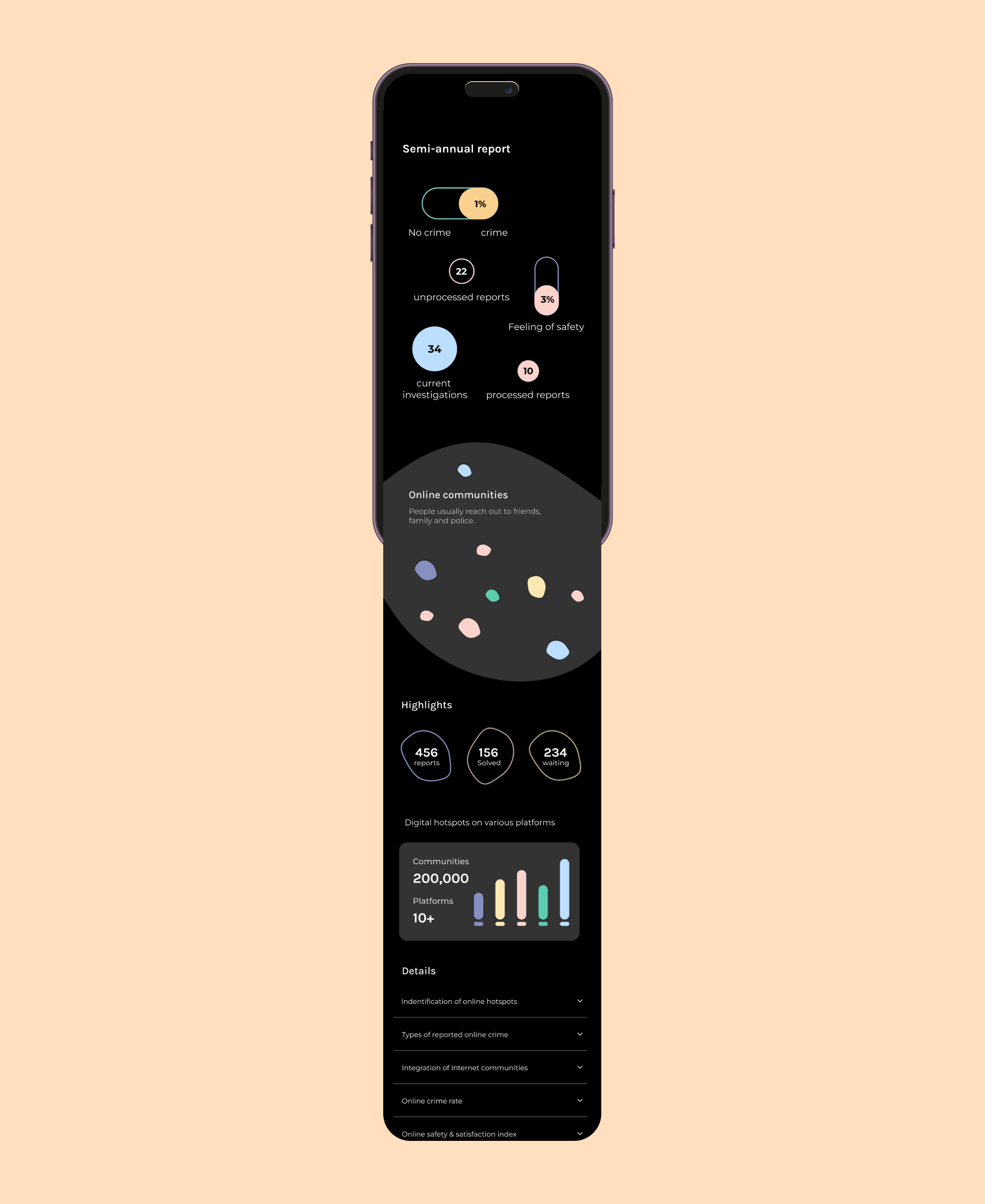

Every six months, the police receive a digital report summarizing reported, resolved, and pending online crimes. These updates help them track emerging digital hotspots, stay vigilant, and act quickly when needed.

“This feels like something I could actually use. I wouldn’t hesitate, it’s not scary, and it doesn’t make it bigger than it is.”

While exploratory, the project demonstrated how cybersecurity can shift from fear-based messaging to shared care and self-respect in digital identity.

It helped define a service model where:

This was not just a UI challenge; it required navigating emotion, responsibility, and power dynamics.

• Led UX research and synthesized behavioral insights

• Designed and facilitated co-creation workshops with teenagers

• Created the interaction model and UI system for the solution

• Collaborated with social workers and law enforcement representatives

This project taught me how to design in spaces where stakes are emotional, social, and relational, not just functional.

I learned to balance support and agency, especially when involving both young people and police, where trust and tone are everything.